AI and the Cybersecurity Landscape

There have been countless articles posted about the new AI chat bots in the past few months, and, since those bots became available to the public, there have been many interesting and exciting demonstrations built on top of those systems.

But, and there is always a “but,” there are some very worrying considerations from a cybersecurity perspective, both in the bots’ infrastructure itself and in the (ab)uses that can come from them.

Chat “bots” or just very lucky gamblers?

“Bots” is a term that has many implications. From our collective experience with robots, first with sci-fi examples and then with the actually deployed robotic systems across different industries, we “expect” that (ro)“bots” are capable of, at the very least, performing autonomous repetitive tasks in a predictable way.

Chat bots, on the other hand, behave slightly differently. One day you may prompt them for “an introduction to the next Moby Dick” and get one result, and the next day – or 2 minutes later for that matter – the same prompt will give you radically different results. That is not how one expects bots to behave.

Why is that, I hear you ask? Well, it turns out that “chat bots” are, at their core, glorified machine learning language models. In simple terms, they are very good at identifying rules like “grammar” and “guessing” what “word” is likely to follow in a given context. You surely noticed that “grammar,” “guessing,” and “word” are in quotation marks. That’s because the same idea applies to images, sound, or video just as it does to text. The same kind of model underpinning the current generation of chat bots can be trained to generate an image from a prompt as readily as a paragraph of text. It’s all about the rules and guessing what would best fit those rules next.

So, how does this explain different responses to the same prompts? Well, “guessing” what comes next is just that – looking at probabilities and finding the best fit for a given prompt. And that can, and does, change over time, as the bot is trained on more data, or human feedback reduces the value of one response relative to another, or even simple random chance does its thing. Anyone who has ever played at a casino understands that a 90% win chance still means that 10% of the time you’ll lose.
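To make the casino analogy concrete, here is a minimal sketch of weighted random selection of the next word. The prompt, the candidate words, and their probabilities are all invented for illustration; real language models work over far larger vocabularies and do not necessarily sample this way.

```python
import random

# Hypothetical next-word probabilities for the prompt "Call me".
# The words and numbers are made up for this example.
NEXT_WORD_PROBS = {"Ishmael": 0.90, "maybe": 0.07, "later": 0.03}


def sample_next_word(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


def most_likely_word(probs):
    """Always pick the single most probable word (no randomness)."""
    return max(probs, key=probs.get)


rng = random.Random()  # unseeded on purpose: every run differs
samples = [sample_next_word(NEXT_WORD_PROBS, rng) for _ in range(1000)]

print("Most likely word:", most_likely_word(NEXT_WORD_PROBS))
print("Times 'Ishmael' was sampled out of 1000:", samples.count("Ishmael"))
```

The most likely word never changes, but the sampled count shifts from run to run, and roughly one time in ten the "90% answer" is not the one that comes out.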

The bots are extraordinarily good at learning the rules.

The rules of the game

Cybersecurity is, at its core, a rules-based game. You have systems/software/people that follow a set of rules, and you have another group of systems/software/people that will try to exploit the first group’s rules for some gain.

Take spam and phishing emails, for example. Everyone’s seen them, everyone receives them, and hopefully spam filters will keep most of them away, but the odd one still breaks through. Spam filters look for telltale signs that an email is spam: they apply a set of rules, and the emails that get through manage to break or avoid those rules. Whether spam filters have explicit rules defining the emails to block or some fancy heuristics-based approach, they’re still rules all the same. You just don’t create the rules directly in the second case.

One reason spam emails have been relatively easy to spot, and block, is the (many) obvious grammatical mistakes – no, your bank does not want “you credit card number and verification id.” So you could flag emails simply by running spelling and grammar checkers on the text they contained.

But, now, freely available bots can write text with no such errors. We might have moved from flagging poorly written emails to flagging emails that have absolutely no mistakes, abbreviations, or other language tells at all. And this happened overnight.
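The language-error rule described above, and why clean text defeats it, can be sketched roughly as follows. This is a toy under stated assumptions: the vocabulary, the threshold, and both sample messages are hypothetical, and a real filter would combine many more signals than spelling.

```python
import re

# Tiny, hypothetical vocabulary standing in for a real dictionary.
KNOWN_WORDS = {
    "your", "bank", "needs", "you", "to", "confirm", "the", "account",
    "please", "send", "credit", "card", "number", "and", "verification",
}


def misspelled_ratio(text, vocabulary=KNOWN_WORDS):
    """Return the fraction of words in the text not found in the vocabulary."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    unknown = [word for word in words if word not in vocabulary]
    return len(unknown) / len(words)


def looks_like_spam(text, threshold=0.2):
    """Flag the text when too many of its words look misspelled."""
    return misspelled_ratio(text) > threshold


# Both messages are invented examples.
sloppy = "Plese confrim you acount numbr"
polished = "Please confirm your account number"

print(looks_like_spam(sloppy))
print(looks_like_spam(polished))
```

The sloppy message trips the rule, while the polished one, asking for exactly the same thing, passes untouched. A rule that only measures language quality has nothing to say about intent.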

But spam emails are not the only aspect of cybersecurity affected by the rise in popularity of these bots. Another very interesting feature of these bots, or language models, is how easily they translate text from one language to another. In goes text in some foreign language you’re not familiar with, and out comes the same text in a language you can read perfectly well. More than simply a direct translation, it will (usually) understand context and provide significantly more meaningful text than previous automatic translation systems. This has the less obvious consequence of letting such a system translate computer code to plain English. Yes, computer code is simply another language, with another set of rules. In fact, all computer programming languages are exactly that – a set of rules. And the bots learn rules easily.

What does this mean exactly? Well, in goes the reverse-engineered code for a binary file, out comes a plain text explanation of what it does, line-by-line if you so choose. As seasoned programmers can tell you, this is unheard of, and one of the reasons why reverse engineering is a dark art. It takes a lot of effort for humans to understand, reason about, and explain code they haven’t written – or even code they wrote themselves a few months ago. The bots have no such issue.

So, how does this affect cybersecurity? First, blue teams have a great new tool for their arsenal. Found a new malware strain that you can’t identify? Feed the code to a bot and it will explain what it does. Interested in understanding how a competitor’s application works? Need help understanding the ACLs and firewall rules in a certain piece of network equipment?

Won’t take more than a few moments.

And the reverse is also true. Do you want to write a new reverse shell you can upload to a remote server? Something never before seen that EDR systems will not detect and that won’t trigger any alerts? Give the bot the right prompt and out comes the code.

Current-generation AI bots are supposed to have protections in place to avoid generating such outputs, but cleverly constructed prompts have been found to work around those protections.

The glitch in the matrix

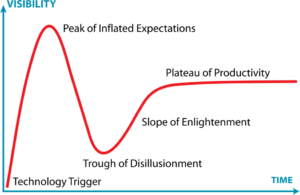

It’s not all perfect in AI land, however. We’re still riding the hype wave with these new bots, but as with everything in IT, reality checks will dampen expectations somewhat.

In the case of AI bots, the hype will likely diminish as the shortcomings become more apparent.

First and foremost, the data the bots were trained on, where they learned the rules from, is biased. This is inherently unavoidable and expected on all machine learning platforms. What this means is that all the outputs coming from chat bots are inherently biased as well. And I’m not referring to the usual human biases like prejudice and representation, and other assorted undesired behaviors – which exist too. I mean biased in that the bot was trained on an (obviously) limited sample of data. This will limit the range of possible answers to a given prompt.

The other very harsh reality check is that AI bots are not expert systems. In fact, they know absolutely no specific fact at all. Ask any of the current generation of bots for a specific piece of information and you’re likely to get a wrong or at least inaccurate answer. They are very good at predicting the next word according to the rules, but won’t “remember” a fact. Ask one for a URL to something and it will create, on the fly, a perfectly well-formed URL. But that URL is just as likely to be broken, because it was made up on the spot.
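The gap between a URL that follows the rules and a URL that actually leads somewhere can be shown with a small sketch. The helper name and the sample URL are hypothetical, and the check below only looks at syntax; it deliberately makes no network request.

```python
from urllib.parse import urlparse


def is_well_formed_url(url):
    """Check only the shape of a URL: an http(s) scheme and a host name."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


# A made-up URL of the kind a bot might produce.
generated = "https://example.com/docs/made-up-page"

print(is_well_formed_url(generated))
print(is_well_formed_url("not a url"))
```

A syntax check like this will accept the made-up URL, since it obeys every rule of URL structure. Whether the page exists is a separate question that only an actual request, or a human who knows the subject, can answer.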

AI bot content creation – be it text, images, code, or whatever else – does not exclude human validation. You still need to have some domain knowledge of the subject to spot the issues. The answers will look flawless at face value, but can be wrong. As an example, they were wrong often enough that Stack Overflow has already banned AI-generated answers.

And this isn’t even touching on aspects like digital rights, accountability, or responsibility. Who owns an AI-generated answer? Who is responsible for fixing issues that come from a bad response? Who owns a new AI-generated image?

Closing remarks

We’re living through a new and fascinating era in IT. AI will, for the first time after so many false starts, finally bring us close(r) to actually usable, sci-fi-level interactions. It has the potential to increase productivity by orders of magnitude, especially in rules-based activities – and most are – where instead of doing the actual task, you simply prompt the bot and validate the output. In our cybersec field, this applies immediately to programming, threat analysis, vulnerability testing, or system assessment.

But consider that none of the freely available AI bots in this generation are internet enabled. That’s not by chance. The security implications of having a system that can, at a prompt, initiate behaviors on third-party systems are scary to many, including the companies behind the AI bots. The safeguards against racial bias, prejudice, and discrimination have been easily manipulated, as have the safeguards against malicious outputs (like generating malware), and no one wants to be the first to have an AI-bot-initiated denial of service attack on their platform. Or an AI-bot-initiated security probe. Or an AI hacker.

Surely, security solution vendors are looking into ways to integrate AI into their systems. How could they not? But remember the bias mentioned above? If your new AI-powered security solution tends to explain things in a particular way, it may overlook or misinterpret the data. How much or how little that matters will be entirely situational, but results should come with a very healthy pinch of salt.
